July 26, 2012

The Many Eyes of the Internet

Another month, another Google Tech Talk. First, I have to thank Google for these great free talks; the quality and variety of speakers are truly astounding. This month's talk was The Distributed Camera: Modeling the World from Online Photos, in which speaker Noah Snavely went over the work he and his team have done at Cornell on 3D reconstruction of scenes from crowd-sourced photos. The project will be quite familiar to anyone who saw the TED talk on Microsoft's Photosynth a while back. However, this being a technical talk, Snavely also roughed out how his team's feature recognition algorithm works.

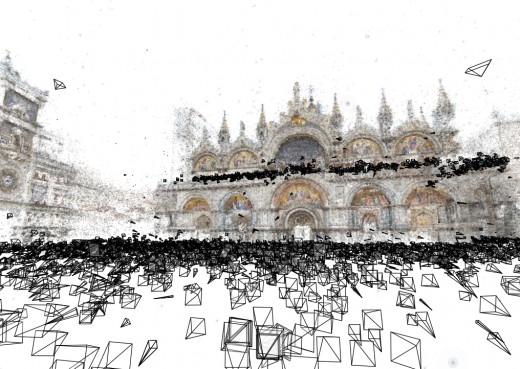

In effect, the idea is to grab hundreds to thousands of photos of a single landmark from sites like Flickr. An algorithm then finds distinctive features within each photo using a keypoint detector called SIFT (Scale-Invariant Feature Transform). Each photo is then compared to every other photo for similar features. Once the feature points have been matched between photos, the algorithm can begin solving for each camera's position and angle, and computing the 3D points from the 2D projections provided by each photo.
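To make the "compare every photo to every other photo" step concrete, here is a minimal sketch in plain Python. It is not Snavely's implementation. It assumes each photo has already been reduced to a list of descriptor vectors (real SIFT descriptors have 128 dimensions; the toy ones here have 3), and it uses a nearest-neighbor ratio test to throw out ambiguous matches, which is a common companion to SIFT but was not something described in the talk. The names `match_features`, `match_all_pairs`, and `photos` are made up for illustration.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two descriptor vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_features(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs where descriptor i of photo A matches j of photo B.

    A match is kept only if the best candidate is clearly closer than the
    second best (ratio test). The 0.8 threshold is an assumption.
    """
    matches = []
    for i, d in enumerate(desc_a):
        # Rank every descriptor in B by its distance to d (brute force).
        ranked = sorted(range(len(desc_b)),
                        key=lambda j: squared_distance(d, desc_b[j]))
        if len(ranked) < 2:
            continue
        best, second = ranked[0], ranked[1]
        d1 = squared_distance(d, desc_b[best])
        d2 = squared_distance(d, desc_b[second])
        # Distances are squared, so the ratio is squared as well.
        if d1 < (ratio ** 2) * d2:
            matches.append((i, best))
    return matches


def match_all_pairs(photos, ratio=0.8):
    """Match every unordered pair of photos against each other."""
    names = sorted(photos)
    result = {}
    for a in range(len(names)):
        for b in range(a + 1, len(names)):
            pair = (names[a], names[b])
            result[pair] = match_features(photos[names[a]],
                                          photos[names[b]], ratio)
    return result


# Hypothetical toy descriptors for two photos of the same landmark.
photos = {
    "p1": [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)],
    "p2": [(1.0, 0.1, 0.0), (0.0, 0.1, 1.0), (0.5, 0.5, 0.5)],
}
print(match_all_pairs(photos))
```

Note that when two candidates in the second photo are equally close, the ratio test keeps neither, so a feature that looks identical in two places produces no match at all rather than a wrong one.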

The algorithm does not use any camera GPS or time data, since both can be quite inaccurate depending on the conditions under which the photo was taken (bad GPS signal, indoors vs. outdoors, etc.). The output of all this hard work is a 3D point cloud in which each camera is shown as a small pyramid representing its position and angle (for more technical details on the algorithm, see Snavely's paper).
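For a feel of what "solving for the camera position and angle" involves, the sketch below projects a 3D point into a toy pinhole camera and measures how far a guessed point lands from where the photos actually saw it. This is my own simplified illustration, not the method from the talk: it assumes a camera that only rotates about the vertical axis (a single `yaw` angle), a focal length of 1, and no lens distortion. A real solver would search over camera poses and points together to drive this kind of error down.

```python
import math


def project(point, cam_pos, yaw, focal=1.0):
    """Project a 3D world point into a camera at cam_pos.

    The camera looks along +z when yaw is 0 and turns toward +x as yaw
    grows (radians). Returns (u, v), or None if the point is behind it.
    """
    # Shift so the camera sits at the origin.
    x = point[0] - cam_pos[0]
    y = point[1] - cam_pos[1]
    z = point[2] - cam_pos[2]
    # Undo the camera's yaw to get camera-frame coordinates.
    c, s = math.cos(yaw), math.sin(yaw)
    xc = c * x - s * z
    zc = s * x + c * z
    if zc <= 0:
        return None
    # Perspective divide: farther points land closer to the image center.
    return (focal * xc / zc, focal * y / zc)


def reprojection_error(point, cameras, observations):
    """Sum of squared pixel offsets between predicted and observed positions."""
    total = 0.0
    for (cam_pos, yaw), (u_obs, v_obs) in zip(cameras, observations):
        projected = project(point, cam_pos, yaw)
        if projected is None:
            return math.inf
        total += (projected[0] - u_obs) ** 2 + (projected[1] - v_obs) ** 2
    return total


# Two hypothetical cameras, two units apart, both facing +z.
cameras = [((0.0, 0.0, 0.0), 0.0), ((2.0, 0.0, 0.0), 0.0)]
true_point = (1.0, 2.0, 4.0)
observations = [project(true_point, pos, yaw) for pos, yaw in cameras]

print(reprojection_error(true_point, cameras, observations))
print(reprojection_error((1.0, 2.0, 5.0), cameras, observations))
```

The correct point reproduces both observations exactly, while a guess that is one unit too deep does not, and that difference is the signal a solver follows.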

One can easily see the benefit of such technologies. If you watch the TED talk on Photosynth, you'll see how it allows for a Google Maps-like zoom of landmarks by using the multitude of photos people have taken from different angles and at different levels of detail. Moreover, it gives a fairly accurate 3D model of a building. Such a model can be used for many tasks. For instance, imagine automatically updating street views, associating new photos with existing models, or even annotating them. However, the system isn't quite perfect.

How could such a model cover the uninteresting and banal parts of cities, where the number of photos is small to nonexistent? Snavely's solution was to turn the task of generating this data into a crowd-sourced game, an approach that has proven so successful in recent projects (think FoldIt). The inaugural competition between the University of Washington and Cornell led to a narrow Cornell victory, after they discovered how to game the system by taking extreme close-ups of buildings, which generated extra 3D points and thereby extra in-game points. There are also other sorts of data one could potentially pull in, including satellite imagery, blueprints, and more.

Another major weakness is inherent to the algorithm itself: since the computer simply compares similar features, any building with symmetry can lead to egregious errors. For example, given a dome with eight-fold symmetry, the algorithm can mistakenly think each of the eight sides is the same, duplicating and rotating all the camera positions and points about the dome! Such a shortcoming can be overcome by giving the algorithm a basic understanding of symmetry and making the matching less greedy, possibly by comparing multiple features in each photo to see whether an angle is different or not.

Lastly, the algorithm is slow: O(n²m²) by my estimate, where n is the number of photos and m is the average number of features per photo (though I haven't computed big-O in years, so don't take my word for it). Snavely admitted that even using up to 300 machines, it can take days to process a couple thousand photos.
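To put a rough number on that big-O estimate, here is a quick back-of-the-envelope count. It assumes the naive approach in which every feature in one photo is compared against every feature in the other, for every unordered pair of photos. The figure of 1,000 features per photo is my own assumption for illustration, not a number from the talk, and a real pipeline may well use smarter search structures.

```python
def brute_force_comparisons(n_photos, feats_per_photo):
    """Count descriptor comparisons for naive all-pairs matching."""
    # Each unordered pair of photos is compared once: n(n-1)/2 pairs.
    pairs = n_photos * (n_photos - 1) // 2
    # Within a pair, every feature meets every feature: m * m comparisons.
    return pairs * feats_per_photo ** 2


# "A couple thousand" photos, with an assumed 1,000 features each.
small = brute_force_comparisons(2000, 1000)
large = brute_force_comparisons(4000, 1000)

print(f"{small:,} comparisons for 2,000 photos")
print(f"{large:,} comparisons for 4,000 photos")
print(f"doubling the photos costs about {large / small:.2f}x more")
```

Under these assumptions the count is already around two trillion comparisons for 2,000 photos, and doubling the number of photos roughly quadruples it, which squares with needing a few hundred machines.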

The talk was incredibly interesting and informative. Such technologies leverage crowd-sourcing as a natural extension of our new data-infused world. As the amount of data out there continues to outgrow our ability to sort it into meaningful models, such automated systems will become increasingly important. I'd like to thank Google and Snavely again for giving us a peek into this fascinating future.

Published by: benchirlin in The Design Mechanism, The Internal Mechanism

Tags: Google Tech Talk, photography, technology, TED